With their ability to generate human-like responses, large language models have revolutionised generative artificial intelligence (AI).

These models, trained on vast datasets, have demonstrated remarkable abilities in various tasks, from composing poetry to coding.

However, despite their capabilities, large language models are not without limitations.

One of the most notable challenges when using them is their dependence on the data they were trained on.

While they can generate responses based on a massive amount of information, they are limited to what they have “learned” during training.

This becomes particularly evident when they’re tasked with providing up-to-date information or responding to queries outside of their training data’s scope.

To help bridge this gap, a technique known as prompt engineering has emerged.

In this blog, we explore how AI prompt engineering has become a critical tool in the developer’s arsenal, enabling LLMs to move beyond their training limitations and offer more contextually aware outputs.

What Is Prompt Engineering?

When working with generative AI, especially with large language models (LLMs), the input or “prompt” we provide is critical. It’s not just about asking a question or giving an instruction; it’s also about how you do it.

Here are the core elements that make up prompt engineering:

Prompt: A prompt is the input a prompt engineer gives to an AI model. It’s a question or statement that guides the model in generating a response. By carefully designing the prompt, engineers can steer the AI towards generating responses that are relevant and aligned with the desired outcome.

Completion: Completion is the AI-generated text that follows a prompt. It’s the AI’s contribution to the dialogue, aiming to continue, answer, or elaborate on the information provided in the prompt.

Context Window: The context window is the frame of reference the AI has when generating a completion. It’s the amount of information, measured in tokens, that the model can “see” at any given moment.
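The prompt and the completion both have to fit inside the context window. Because of this, it can help to check the token budget before sending a request. The sketch below assumes a rough heuristic of about four characters per token for English text. A real tokenizer for the specific model would give exact counts, and the 4,096-token default is only an illustrative value.

```python
import math

# Assumption: about 4 characters per token for English text. This is only a
# rough heuristic; use the model's own tokenizer for exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens a piece of text will use."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context_window(prompt: str, max_completion_tokens: int,
                        context_window: int = 4096) -> bool:
    """Return True if the prompt plus the reserved completion length fit.

    The prompt and the completion share one context window, so room for the
    completion has to be reserved up front.
    """
    return estimate_tokens(prompt) + max_completion_tokens <= context_window


prompt = "Is 17 a prime number? Explain your reasoning, then answer true or false."
print(estimate_tokens(prompt), fits_context_window(prompt, max_completion_tokens=500))
```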

With prompt engineering, a prompt engineer will strategically structure the prompts we feed into generative AI tools to guide them towards more accurate and contextually appropriate responses.

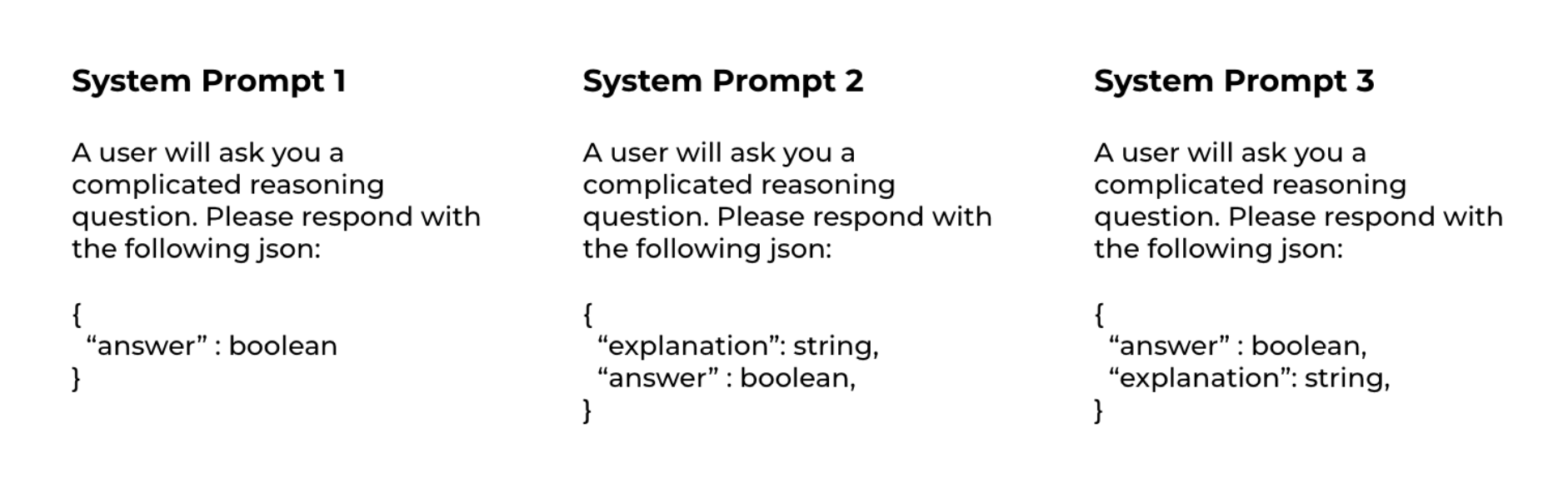

Take a look at the image below, which showcases a few examples of different system prompts we designed for a reasoning task:

These prompts were part of a quiz we used to illustrate a simple yet powerful prompt engineering technique:

- In System Prompt 1, our prompt engineers ask the AI for a straightforward answer—a boolean value, true or false.

- In System Prompt 2, our prompt engineers request an explanation followed by an answer.

- In System Prompt 3, our prompt engineers reverse this order, asking for an answer and then an explanation.

Our experience in prompt engineering has shown that System Prompt 2 typically delivers the best results from the language model.

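Asking for the explanation first means the model generates its reasoning before it commits to an answer. A language model predicts each token from everything that came before it, so the final answer is then conditioned on that reasoning. In System Prompt 3, by contrast, the answer is already fixed before any explanation has been written.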

This illustrates how critical the role of prompt engineering is in leveraging AI’s capabilities. When you understand the model’s mechanics—how it predicts the next word or phrase based on the given prompt—you can develop prompts that encourage more comprehensive and context-aware responses.
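The three system prompts in the image are not reproduced here, so the wording below is hypothetical. Only the ordering of explanation and answer follows the description above. The sketch also includes a small parser that pulls the final true/false answer out of an explanation-first completion. It assumes the model was asked to finish with a line such as `Answer: true`.

```python
import re
from typing import Optional

# Hypothetical wording for the three quiz prompts. Only the ordering
# (answer only / explanation then answer / answer then explanation) matters.
SYSTEM_PROMPTS = {
    1: "Reply with only true or false.",
    2: ("First explain your reasoning step by step. Then, on the last line, "
        "write 'Answer: true' or 'Answer: false'."),
    3: ("Start with 'Answer: true' or 'Answer: false', "
        "then explain your reasoning."),
}

ANSWER_PATTERN = re.compile(r"Answer:\s*(true|false)", re.IGNORECASE)


def parse_boolean_answer(completion: str) -> Optional[bool]:
    """Extract the final true/false answer from a completion, or None if absent."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    # Take the last match: in the System Prompt 2 format the answer follows the reasoning.
    return matches[-1].lower() == "true"


# Hypothetical completion in the System Prompt 2 format.
completion = (
    "17 is only divisible by 1 and by itself, so it is prime.\n"
    "Answer: true"
)
print(parse_boolean_answer(completion))
```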

💡 Prompt Engineering vs. Natural Language Querying

While natural language querying and prompt engineering are both integral to AI and natural language processing, they serve different purposes and are used in different contexts.

Prompt engineering involves crafting structured prompts that guide the behaviour or output of a large language model so that it produces more accurate or contextually relevant responses.

Natural language querying is designed to be accessible and user-friendly, allowing non-technical users to interact with systems using everyday language.

How Context Windows Impact Prompt Engineering

In prompt engineering, a context window refers to the amount of information large language models can consider at one time when generating a response.

Initially, these models could only consider around 4,096 tokens, roughly 3,000 English words (a common rule of thumb is about 0.75 words per token). As models have evolved, context windows have grown far larger, with some, such as Google’s Gemini 1.5 Pro, stretching to a million tokens or more.

However, there is a caveat: more context doesn’t always mean better results. Larger context windows let the model consider more information and so can enable more comprehensive responses, but they can also introduce challenges.

If you give a large language model too much information, it might overlook key details. On the other hand, with too little relevant context, the model might not have enough to go on, which leads to a less accurate or relevant response.

Prompt engineers can manage these context windows to provide just the right amount of information—enough to guide the generative AI model, but not so much that it becomes overwhelmed or distracted.
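One simple way to give the model “just the right amount” of information is to rank candidate passages by relevance and keep adding them until a token budget is reached. The following is a minimal greedy sketch. It assumes relevance scores are already available (from keyword overlap, embeddings, or another method) and reuses a rough four-characters-per-token estimate. The example passages are hypothetical.

```python
import math
from typing import List, Tuple

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def select_context(chunks: List[Tuple[float, str]], token_budget: int) -> List[str]:
    """Keep the most relevant chunks that fit within the token budget.

    `chunks` holds (relevance_score, text) pairs. Chunks are considered from
    most to least relevant; a chunk that would exceed the budget is skipped,
    but smaller, less relevant chunks may still fit afterwards.
    """
    selected: List[str] = []
    used = 0
    for _score, text in sorted(chunks, key=lambda c: c[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= token_budget:
            selected.append(text)
            used += cost
    return selected


chunks = [
    (0.9, "Part 1001: right-hand side steering wheel."),
    (0.1, "Canteen opening hours for the assembly plant."),
    (0.6, "Variant code RHD applies to right-hand drive vehicles."),
]
print(select_context(chunks, token_budget=30))
```

A greedy pass is easy to reason about. However, it does not guarantee the best possible combination of chunks for a given budget.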

💡 Latent Knowledge vs. Learned Knowledge

Latent knowledge refers to the information that a generative AI model has inherently learned from its training data. It’s the vast amount of facts, concepts, and language patterns the model has been exposed to during training.

Learned knowledge, on the other hand, is information the AI model gathers during its interactions post-training. It’s the specific details and context provided within the prompt or through additional tools that supplement the model’s latent knowledge for a particular task.

Tools We Use to Improve AI Models Through Prompt Engineering

While LLMs are adept at processing and generating text based on patterns they’ve learned, they have limitations—particularly when it comes to tasks requiring up-to-date information, domain-specific knowledge, or complex calculations.

At MOHARA, we recognise these limitations and have honed our prompt engineering techniques to ensure that the LLMs we use can perform more effectively across a broader spectrum of tasks:

LangChain

LangChain is an open-source framework created to streamline the development of applications that leverage the capabilities of LLMs. It provides modular components and APIs that simplify building LLM-powered applications, including integrations that let a model call external tools.

These tools can range from simple calculators to more complex systems capable of querying databases, interfacing with web services, or performing specialised computations.
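To illustrate the tool idea without tying it to any framework’s API, here is a plain-Python sketch of a tool registry with a single calculator tool. The `TOOL <name>: <input>` output format is a hypothetical convention chosen for this example; LangChain and similar frameworks define their own tool-calling formats. The calculator walks the parsed expression tree instead of calling `eval()`, so text produced by the model cannot execute arbitrary code.

```python
import ast
import operator
from typing import Callable, Dict

# Arithmetic operators the calculator tool is allowed to evaluate.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculator(expression: str) -> str:
    """Safely evaluate a basic arithmetic expression and return it as text."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")
    return str(_eval(ast.parse(expression, mode="eval").body))


# Registry of tools the model may call, keyed by name.
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def run_tool_call(model_output: str) -> str:
    """Dispatch a line such as 'TOOL calculator: 12 * (3 + 4)' to the named tool."""
    header, _, tool_input = model_output.partition(":")
    prefix, _, name = header.strip().partition(" ")
    if prefix != "TOOL" or name not in TOOLS:
        raise ValueError(f"Not a recognised tool call: {model_output!r}")
    return TOOLS[name](tool_input.strip())


print(run_tool_call("TOOL calculator: 12 * (3 + 4)"))
```

In a full loop, the tool’s result would be appended to the conversation so the model can use it in its next completion.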

💬 Dylan Young, Software Engineer at MOHARA


Chain-of-thought prompting

Chain-of-thought is a prompt engineering technique where the generative AI is guided to articulate a step-by-step reasoning process behind its answers.

It’s particularly effective for complex reasoning tasks that require detailed explanation or logical progression, and its benefits tend to be most pronounced in larger generative AI models.

While chain-of-thought prompting can enhance a model’s performance on complex tasks, its impact on simple tasks is generally negligible. Simple tasks have no intermediate steps where breaking down the logic would help.
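A common way to apply chain-of-thought prompting is to include a worked example whose answer spells out the intermediate steps, so that the model imitates that structure on the new question. The sketch below builds such a few-shot prompt and extracts the final answer from a completion. The exemplar and the “The answer is” marker are illustrative choices, not a fixed standard.

```python
from typing import List, Optional, Tuple

# Illustrative worked example: the answer spells out each intermediate step
# and ends with a fixed marker so the final answer is easy to find.
EXEMPLARS: List[Tuple[str, str]] = [
    (
        "Each car needs 4 wheels plus 1 spare. How many wheels do 3 cars need?",
        "Each car needs 4 + 1 = 5 wheels. For 3 cars that is 3 * 5 = 15 wheels. "
        "The answer is 15.",
    ),
]

ANSWER_MARKER = "The answer is"


def build_cot_prompt(question: str) -> str:
    """Build a few-shot chain-of-thought prompt that ends with the new question."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in EXEMPLARS]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last answer marker, or None if it is missing."""
    idx = completion.rfind(ANSWER_MARKER)
    if idx == -1:
        return None
    return completion[idx + len(ANSWER_MARKER):].strip().rstrip(".")


print(build_cot_prompt("Each van needs 4 wheels plus 1 spare. How many wheels do 2 vans need?"))
```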

Retrieval-augmented generation (RAG)

RAG combines the generative power of language models with real-time data retrieval from external sources.

Essentially, it allows generative AI to access a vast pool of information beyond its initial training set, so it can provide responses that are contextually relevant and up to date.

Relevant information is retrieved each time a query is made. As a result, developers can build applications that stay up to date without retraining the model whenever new information becomes available.
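The retrieve-then-generate flow can be sketched in a few lines. Production RAG systems usually rank documents with embeddings and a vector store. This sketch substitutes simple word overlap so that it runs with the standard library alone. The documents and the prompt wording are hypothetical, and the step that sends the prompt to an LLM is left out.

```python
import re
from typing import List, Set


def words(text: str) -> Set[str]:
    """Lower-case word set used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents that share the most words with the query."""
    query_words = words(query)
    ranked = sorted(documents, key=lambda d: len(query_words & words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Insert the retrieved passages into the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base; in practice this is an external, updatable source.
documents = [
    "Part 1001 is a right-hand drive steering wheel.",
    "Part 2002 is an orange steering wheel trim.",
    "The canteen price list was updated in March.",
]
print(build_rag_prompt("Which part is the right-hand drive steering wheel?", documents, k=1))
```

Updating `documents` changes what the model sees on the next query, with no retraining involved.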

Prompt templates

Prompt templates are pre-designed structures that standardise the input format for AI interactions. They are particularly useful when you want the generative AI to assume a specific role, achieve a certain goal, or follow a set of instructions.

By creating a template, developers can control how the generative AI model approaches a task, which often leads to improved performance and more predictable output.

Here’s an example of a prompt template for a language model:

| Component | Example |
| --- | --- |
| Role: Tell the AI who it is | “You are an expert chef, creating simple dinner recipes for students” |
| Goal: Tell it what to do | “You need to create delicious dinner recipe ideas for the user” |
| Step-by-step instruction | “Provide the cooking steps with the ingredients and the measurements for each” |
| Examples | {Insert your favourite recipe example} |
| Personalisation | Use a particular style, for example, “Provide your responses in the likeness of Gordon Ramsay” |
| Constraints | “Never suggest recipes that require peanuts” |
| Refinement | “Ask for feedback on which ingredients are not available and suggest alternatives” |
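The components in the table can be assembled into a single system prompt in code. This minimal sketch uses a Python dataclass whose fields mirror the table’s rows. The rendering order and the blank-line separators are assumptions, not a required format. The examples field is left empty here, just as the table leaves it as a placeholder.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptTemplate:
    """One field per row of the prompt template table."""
    role: str
    goal: str
    instructions: str
    examples: str = ""
    personalisation: str = ""
    constraints: str = ""
    refinement: str = ""

    def render(self) -> str:
        # Keep the table's order and skip any component left empty.
        parts = [getattr(self, f.name) for f in fields(self)]
        return "\n\n".join(p for p in parts if p.strip())


chef = PromptTemplate(
    role="You are an expert chef, creating simple dinner recipes for students.",
    goal="You need to create delicious dinner recipe ideas for the user.",
    instructions="Provide the cooking steps with the ingredients and the measurements for each.",
    personalisation="Provide your responses in the likeness of Gordon Ramsay.",
    constraints="Never suggest recipes that require peanuts.",
    refinement="Ask for feedback on which ingredients are not available and suggest alternatives.",
)
print(chef.render())
```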

A Practical Application of Prompt Engineering at MOHARA

At MOHARA, we applied our expertise in prompt engineering to tackle a challenge one of our clients faced in the automotive industry: ensuring the accuracy of Bills of Materials (BOMs) for vehicle manufacturing.

A BOM is an exhaustive list that details every component needed to build a product. In automotive manufacturing, this list can be exceptionally long and complex, often containing thousands of parts, each with specific attributes.

Inaccuracies in BOMs can lead to production delays and increased costs, so validating them is critical. Traditionally, this involved labour-intensive manual reviews or basic database queries—methods that were not only slow but also susceptible to human error.

To address these challenges, we turned to prompt engineering techniques and employed RAG to refine our validation processes. We developed an Excel add-on that leverages an LLM to interact directly with the BOMs.

Initially, our system facilitated basic inquiries about the BOMs, like counting parts and assessing validation percentages.

However, we soon expanded its capabilities to include more complex queries, leveraging the LLM’s ability to understand and process the semantics of the BOM data.

This proved invaluable for identifying components listed under various names or descriptions—situations where traditional methods might falter.

For instance, being able to tell the difference between a “right-hand side steering wheel” and an “orange steering wheel” requires a nuanced understanding that our generative AI system could provide, thanks to the prompt engineering techniques we employed.

Additional capabilities from our experiments included:

- Creating logical rules through one or more rounds of conversation with a person.

- Generating the code to test those rules on the BOM.

- Answering natural language questions about a dataset.

- Comparing parts of the current BOM with a historic reference to show differences in content and attributes.

- Linking a fleet’s vehicle specifications to the BOM and testing logical rules on it.

- Interpreting tree charts, summarising variant configuration issues, and checking whether an issue is logical given the engineering and product context.

- Interpreting variant configuration codes, translating between them and natural language descriptions, and using these translations in logical rules for testing.

- Detecting anomalous links between variant configuration codes and part commodities.

- Deconstructing the meaning of part numbers.

- Inferring part attribute rulesets from past data.

- Testing part attribute rules on the BOM.

- Creating “Nightletters” and project status summaries.

- Applying guardrails to keep conversations on the BOM topic.

- Doing all of this in a secure, data policy-compliant way.
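The sketch below illustrates the kind of “logical rules” and validation percentages mentioned above. It hand-writes one rule and checks it against a tiny BOM. Everything in it is hypothetical and invented for the example: the `BomLine` fields, the `RHD`/`LHD` variant codes, and the rule itself. It is not MOHARA’s implementation. In the system described above, the LLM generated rule-testing code like this from conversation, whereas this example is written by hand.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class BomLine:
    part_number: str
    description: str
    variant_code: str  # hypothetical variant configuration code, e.g. "RHD"


# A rule returns True when a BOM line passes.
Rule = Callable[[BomLine], bool]


def rhd_steering_rule(line: BomLine) -> bool:
    """Hypothetical rule: steering wheels on RHD variants must be right-hand side parts."""
    desc = line.description.lower()
    if line.variant_code == "RHD" and "steering wheel" in desc:
        return "right-hand" in desc
    return True  # the rule does not apply to this line


def validate(bom: List[BomLine], rules: List[Rule]) -> Tuple[List[BomLine], float]:
    """Return the failing lines and the percentage of lines that pass every rule."""
    failures = [line for line in bom if not all(rule(line) for rule in rules)]
    pass_rate = 100.0 * (len(bom) - len(failures)) / len(bom) if bom else 100.0
    return failures, pass_rate


bom = [
    BomLine("SW-100", "Right-hand side steering wheel", "RHD"),
    BomLine("SW-200", "Orange steering wheel", "RHD"),
    BomLine("BR-300", "Front brake pad set", "RHD"),
    BomLine("SW-400", "Orange steering wheel", "LHD"),
]
failures, pass_rate = validate(bom, [rhd_steering_rule])
print([line.part_number for line in failures], pass_rate)
```

A keyword rule like this is brittle when parts are listed under varying names. That is exactly where the semantic understanding described earlier, such as telling an “orange steering wheel” from a “right-hand side steering wheel”, adds value over hard-coded string checks.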

If you want to enhance your AI’s contextual understanding, streamline your data processing tasks, or need tailored AI solutions for your industry, get in touch with us to explore how MOHARA can help drive your business forward.